Abstract

Informal natural language that describes code functionality, such as code comments or function documentation, may contain substantial information about a program’s intent. However, there is typically no guarantee that a program’s implementation and natural language documentation are aligned. In the case of a conflict, leveraging information in code-adjacent natural language has the potential to enhance fault localization, debugging, and code trustworthiness. In practice, however, this information is often underutilized due to the inherent ambiguity of natural language which makes natural language intent challenging to check programmatically. The “emergent abilities” of Large Language Models (LLMs) have the potential to facilitate the translation of natural language intent to programmatically checkable assertions. However, it is unclear if LLMs can correctly translate informal natural language specifications into formal specifications that match programmer intent. Additionally, it is unclear if such translation could be useful in practice.

In this paper, we describe nl2postcond, the problem of leveraging LLMs for transforming informal natural language to formal method postconditions, expressed as program assertions. We introduce and validate metrics to measure and compare different nl2postcond approaches, using the correctness and discriminative power of generated postconditions. We then use qualitative and quantitative methods to assess the quality of nl2postcond postconditions, finding that they are generally correct and able to discriminate incorrect code. Finally, we find that nl2postcond via LLMs has the potential to be helpful in practice; nl2postcond generated postconditions were able to catch 64 real-world historical bugs from Defects4J

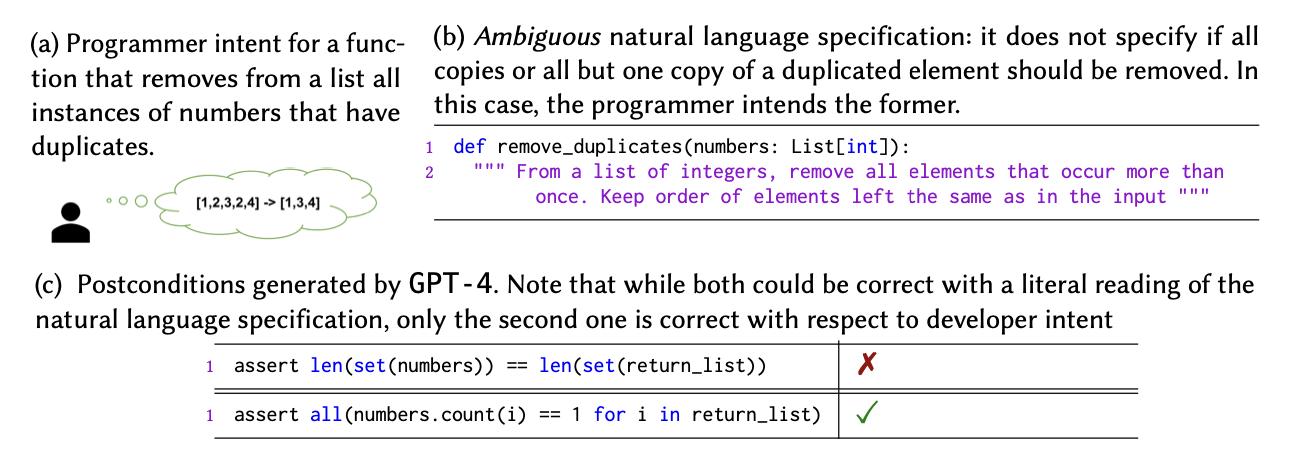

Postconditions Help Formalize Ambiguous User Intent

Example of how postconditions could be used to clarify ambiguous natural language specifications

Natural language specifications, often writen by users when interacting with an LLM via a prompt, are often ambiguous. Consider the exampe above, taken from the popular Python code generation benchmark, HumanEval. A programmer intends a function that removes all numbers with duplicates from a list. For example, given the list [1,2,3,2,4] the function should return [1,3,4] without the element 2 because it appears more than once. The programmer describes the function specification in a docstring. However, this natural language specification is ambiguous; it does not indicate if all copies of the duplicated elements should be removed, or if one copy should be retained. In this case, the programmer intends the former, however, it is not uncommon to expect that the program should fulfill the latter. Figure (c) contains two postconditions, each satisfying one of the two possible intents of the ambiguous docstring. The programmer writing the remove_duplicates function can verify that the second postcondition "assert all(numbers.count(i)==1 for i in return_list)” correctly matches their intent, by ensuring that all numbers in the returned list occur exactly once in the input list. The first postcondition, however, incorrectly asserts that return_list is a set of the input list numbers. In this example from HumanEval, it is not immediately clear at first glance what the user intent is. Generating such postconditions from natural language allows for checkable and unambiguous statements about a program’s intended behavior, formalizing a user’s intent about a program.

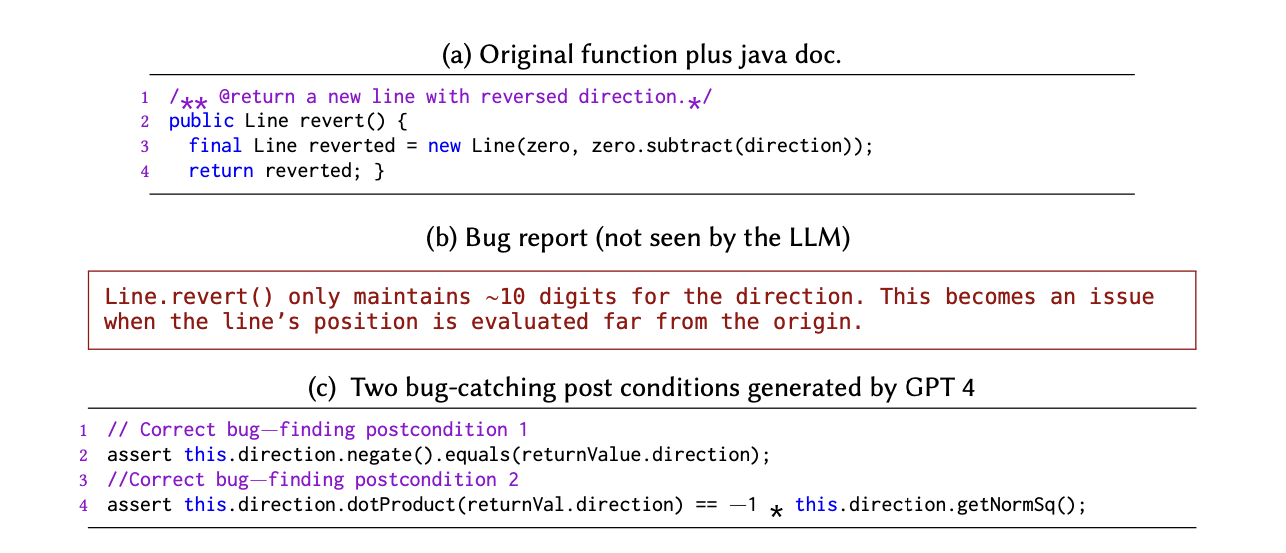

Postconditions Can Catch Bugs in Real-World Programs

Example of how postconditions or other formal specifications of program behavior could catch bugs. This example is a historical bug from Defects4J (Math-9): the Line constructor does not return a new line with enough precision. The postconditions were generated by GPT-4 in our evaluation, and both catch the bug.

In practice, postconditions generated in the target programming language can be used in assertions, as demonstrated in fig. 1c, to check the correctness of a function, enabling the early detection of bugs or violations of a programmer’s intent. The example in the figure above shows how formal specifications can be used to catch bugs in real-world programs. A bug from the Apache Commons Math project, the function revert() calls a constructor Line() that should return a new Line object with a reversed direction. The bug report 1 associated with the issue explains that revert() does not maintain enough precision, and fails in certain scenarios. Both of the provided postconditions in Figure c catch the bug by leveraging project-specific context and general mathematical knowledge about the specifications of a reversed line.

BibTeX

@inproceedings{

endres2024NLPost,

title={Can Large Language Models Transform Natural Language Intent into Formal Method Postconditions?},

author={Madeline Endres and Sarah Fakhoury and Saikat Chakraborty and Shuvendu K. Lahiri},

booktitle={In Proceedings of the ACM on Software Engineering (FSE)},

volume={1}

year={2024}

}